Trustworthy AI: Why It Matters and How We Get There

5 elements of the future of networking, AI ROI and impact, the trust gap, and clear frameworks and practical steps

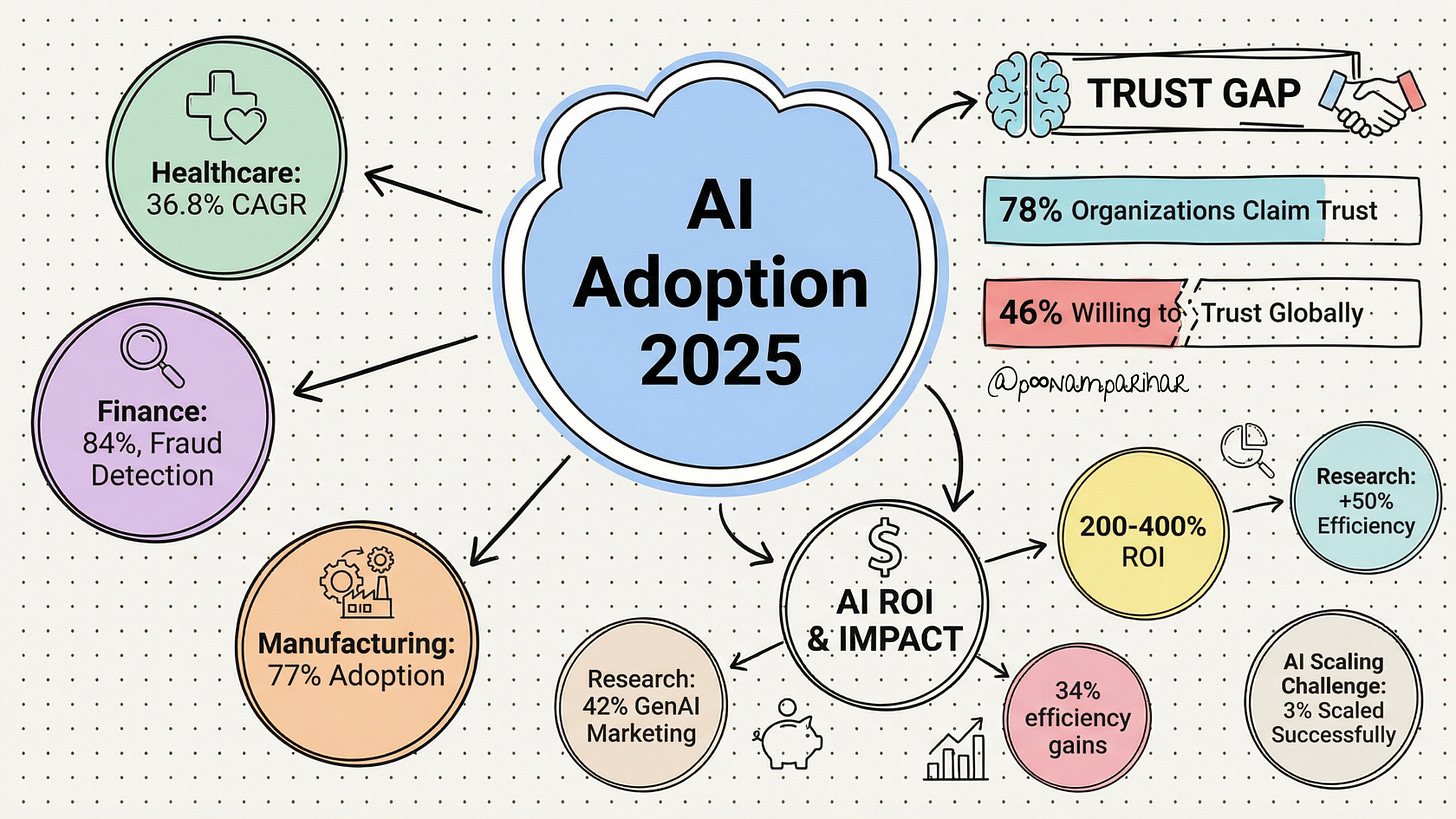

AI is everywhere: powering healthcare diagnostics, factory robots, financial analytics, and customer chatbots. But despite bold claims about “fully trusting” AI, most organizations struggle to use it at scale. Why? Because trust is hard to earn and easy to lose. As AI increasingly influences decisions affecting our health, jobs, and finances, making it trustworthy is no longer optional; it’s essential.

Trustworthy AI in networking, my own area of expertise, means that decision-making algorithms are secure, transparent, and fair. It requires rigorous auditing, explainable insights, and bias detection to build confidence and reliability into automated operations. By embedding ethical and robust AI, networks can proactively defend against threats while adapting intelligently to ever-changing environments. It’s not very different on the application side.

TL;DR

AI adoption is skyrocketing across all major industries, but trust remains a massive barrier to realizing value.

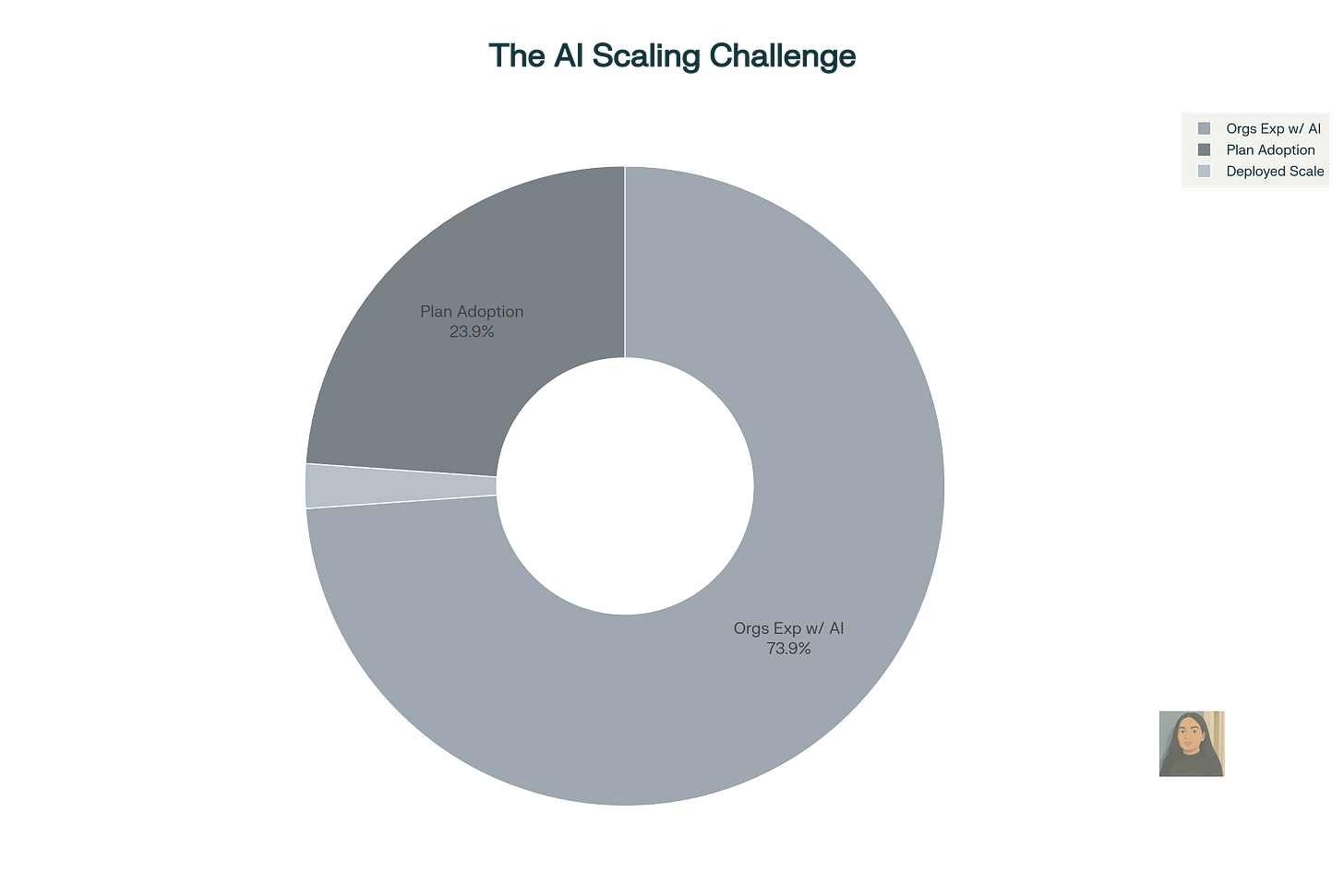

99% of companies experiment with AI, but only 3% scale it successfully, largely because of trust and governance gaps.

Trustworthy AI goes beyond technology: it covers fairness, transparency, accountability, safety, and privacy.

Companies prioritizing responsible AI see fewer failures, faster returns, and safer outcomes.

Clear frameworks and practical steps can help ensure your AI systems are trustworthy and truly pay off.

There are discussions happening about responsible AI, but mostly from a governance perspective, which is only a small part of the picture. I was looking at it from a future-of-networking perspective, and since that may influence this post (even though I want to keep it generic), here is a little background.

The future of networking is an intelligent, adaptive, and automated environment where data, intent, and AI drive real-time decisions and operations. Networks dynamically respond to business needs, self-optimize, and integrate robust security at scale. Foundational pillars of this transformation include open standards, automation, and trustworthy AI.

5 Elements of the Future of Networking:

Data-Centric Networking

Network Automation

Intent-Driven Networks

Trustworthy AI

Knowledge Plane

Why Trustworthy AI Matters

Financial Risks: 64% of enterprises lost over $1 million to AI failures last year, mostly from ungoverned or misunderstood AI deployments.

Scaling Failure: While 99% are using or experimenting with AI, only 3% report success at deploying it widely in their business.

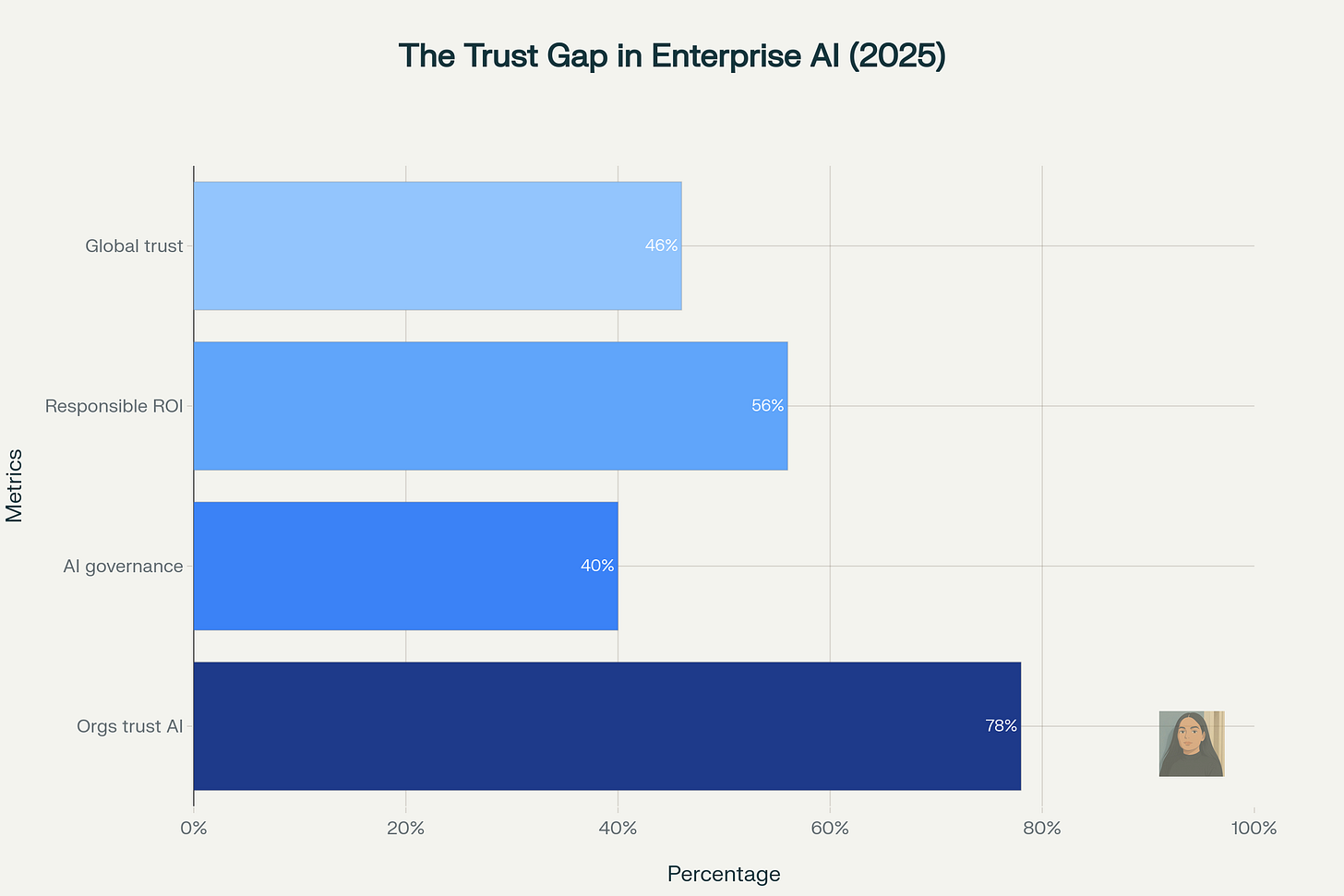

The Trust Gap: 78% of organizations “claim” to trust AI, but only 40% actually have governance structures to support it.

Better Outcomes: Companies with responsible, trustworthy AI practices have 28% fewer failures, much faster time-to-value, and stronger customer and stakeholder trust.

What Makes AI “Trustworthy”?

Trustworthy AI means more than just safe technology. It requires a combination of technical, ethical, and organizational practices. Key elements and core principles include:

Fairness: AI should not discriminate or reinforce existing biases. It’s critical to ensure diverse, representative data and to actively check for unfair outcomes.

Transparency & Explainability: Both how the AI works (“transparency”) and why it makes certain decisions (“explainability”) must be clear. It should not be hidden in a “black box.” If users and stakeholders can’t understand or audit an AI’s choices, trust breaks down.

Accountability: Clear human oversight and responsibility must be assigned for AI outcomes. This includes being able to challenge and redress automated decisions that may cause harm.

Safety & Security: AI systems should be robust, reliable, and designed to do no harm. This includes defending against hacking, errors, and unpredictable failures.

Privacy: Protect user and company data, in full compliance with laws and with clear boundaries on how data is used.

Governance: Establish structures, policies, and continuous oversight to ensure responsible AI use throughout the entire system’s lifecycle.

Industry Trends and AI Adoption

AI’s impact and trust issues, however, play out differently depending on the sector. For example:

Healthcare: AI diagnoses and personalized medicine offer big gains but face high scrutiny for fairness, explainability, and safety.

Manufacturing: AI boosts quality control and predicts equipment failures, but scaling up is often blocked by data-quality and governance issues.

Finance: Automation enables faster credit checks and fraud detection, but requires transparency and explainability for regulators and customers.

Retail & Marketing: AI-powered personalization can drive higher conversion rates when done responsibly, protecting customer data and avoiding bias.

Key Data Points (2025)

Enterprise Use: 78% use AI in at least one business function; 87% of large firms deploy AI widely.

Scaling Success: Only 3% of companies get AI systems working at scale.

ROI & Efficiency: Those who do achieve 34% efficiency gains and 27% cost reductions within 18 months.

Data and Skills Gaps: 73% of firms cite a lack of AI-ready, trustworthy data as their #1 challenge, and skills shortages remain a major bottleneck.

Public Perception: Only 46% of people are willing to trust AI globally.

Governance Gap: 40% of companies have formal AI governance, but 78% claim “full trust.”

How Do We Build Trustworthy AI?

So what paves the path to building trustworthy AI? It’s not very different from what defines it in the first place:

Poor data, not bad algorithms

Most AI failures stem from poor data, not bad algorithms. The foundation is investing in quality data: fixing data governance and ensuring the data is diverse and accurate.
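Investing in quality data starts with measuring it. As a minimal sketch, assuming records arrive as Python dictionaries, the hypothetical `data_quality_report` helper below computes two simple signals: how often required fields are missing, and whether any group is under-represented. The field names, sample telemetry records, and `min_group_share` threshold are illustrative assumptions, not a standard.

```python
from collections import Counter


def data_quality_report(records, required_fields, group_field, min_group_share=0.1):
    """Hypothetical helper: summarize missing values and group representation."""
    total = len(records)
    if total == 0:
        raise ValueError("no records to check")
    # Fraction of records where each required field is absent or None
    missing_rate = {
        field: sum(1 for r in records if r.get(field) is None) / total
        for field in required_fields
    }
    # Fraction of records per group (records lacking the group field count as None)
    counts = Counter(r.get(group_field) for r in records)
    group_share = {group: n / total for group, n in counts.items()}
    # Groups below the assumed minimum share are flagged as under-represented
    underrepresented = sorted(
        (g for g, share in group_share.items() if share < min_group_share), key=str
    )
    return {
        "missing_rate": missing_rate,
        "group_share": group_share,
        "underrepresented": underrepresented,
    }


# Illustrative telemetry records; field names are assumptions
records = [
    {"latency_ms": 12, "region": "emea"},
    {"latency_ms": None, "region": "emea"},
    {"latency_ms": 30, "region": "apac"},
    {"latency_ms": 25, "region": "emea"},
]
report = data_quality_report(records, ["latency_ms"], "region", min_group_share=0.3)
print(report["missing_rate"])      # {'latency_ms': 0.25}
print(report["underrepresented"])  # ['apac']
```

Checks like these are cheap enough to run on every training or retraining dataset, so data problems surface before they turn into model failures.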

Don’t just talk about “fairness” and “transparency”

Implement Clear, Measurable Ethics: Don’t just talk about “fairness” and “transparency”; measure them. Track bias across demographics, audit explainability scores, and create feedback channels for users to challenge decisions.
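One way to make “track bias across demographics” concrete is the demographic parity gap: the difference between the highest and lowest positive-outcome rates across groups. This post does not prescribe a particular metric; this is one common choice, shown as a minimal sketch. The `(group, approved)` input format, the group labels, and the 0.1 tolerance are hypothetical.

```python
from collections import defaultdict


def positive_rate_by_group(decisions):
    """decisions: iterable of (group, approved) pairs; returns approval rate per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions):
    """Largest difference in positive-outcome rate between any two groups."""
    rates = positive_rate_by_group(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit log of automated decisions
decisions = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
gap = demographic_parity_gap(decisions)
TOLERANCE = 0.1  # assumed policy threshold, not a standard value
print(f"parity gap = {gap:.2f}, flagged = {gap > TOLERANCE}")
# parity gap = 0.50, flagged = True
```

A single number like this will not settle whether a system is fair, but tracking it over time turns “fairness” into something a review committee can actually question.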

Don’t view AI as a replacement for people

Establish Human-AI Collaboration: 44% of leaders say the best results come from systems designed for human-AI teamwork.
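A common pattern for human-AI teamwork is confidence-based routing: the system acts on its own only when it is confident and hands everything else to a person. The sketch below assumes the model emits a reasonably calibrated confidence score in [0, 1]; the `Recommendation` type, the `route` function, and the 0.9 threshold are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    """Hypothetical model output: a proposed action plus a confidence score."""
    action: str
    confidence: float  # assumed to be calibrated, in [0, 1]


def route(rec: Recommendation, auto_threshold: float = 0.9) -> str:
    """Act automatically only at or above the threshold; otherwise ask a human."""
    if not 0.0 <= rec.confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    return "auto_apply" if rec.confidence >= auto_threshold else "human_review"


for rec in [
    Recommendation("reroute traffic around degraded link", 0.95),
    Recommendation("shut down suspicious port", 0.60),
]:
    print(rec.action, "->", route(rec))
# reroute traffic around degraded link -> auto_apply
# shut down suspicious port -> human_review
```

Raising the threshold sends more decisions to people; where to set it is a policy choice as much as a technical one.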

Explain both “how” and “why” of AI

Make AI Transparent: Explain both “how” the AI works and “why” any given decision is made. Documentation and interpretability are vital for trust.
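For the “why” of an individual decision, the simplest case is a linear scoring model, where each feature’s contribution is exactly its weight times its value. The sketch below ranks those contributions for one decision; the feature names, weights, and bias are made-up illustrations. Nonlinear models need approximate attribution methods (SHAP is one widely used option), which this sketch does not cover.

```python
def explain_linear_score(weights, bias, features):
    """Score one decision with a linear model and rank each feature's contribution."""
    # For a linear model, contribution = weight * feature value, exactly
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    # Largest absolute contribution first: the main "why" behind this score
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Made-up weights for a hypothetical "link degradation" risk score
weights = {"packet_loss_pct": 2.0, "latency_ms": 0.5, "crc_errors_per_min": 0.25}
features = {"packet_loss_pct": 1.5, "latency_ms": 4.0, "crc_errors_per_min": 2.0}
score, ranked = explain_linear_score(weights, bias=-1.0, features=features)
print(f"score = {score}")  # score = 4.5
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.2f}")
```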

Set up roles to monitor AI

Ensure Oversight and Redress: Set up committees or roles to monitor AI behavior, and ensure people have clear ways to challenge or appeal automated decisions.

Review, Review, Review

Continuously Monitor and Upgrade: Keep reviewing your AI’s outcomes, update it based on feedback, and adapt policies as standards (and regulations) evolve.
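Continuous monitoring usually includes checking whether the data a model sees in production has drifted from what it was trained on. One widely used measure is the population stability index (PSI), computed over matching histogram bins as the sum of (actual share − expected share) × ln(actual share / expected share). A minimal sketch follows; the bin counts are invented, and the 0.2 alert threshold is a common rule of thumb rather than a fixed standard.

```python
import math


def population_stability_index(expected_counts, actual_counts, eps=1e-6):
    """PSI between a baseline and a current histogram over the same bins."""
    if len(expected_counts) != len(actual_counts):
        raise ValueError("histograms must use the same bins")
    expected_total = sum(expected_counts)
    actual_total = sum(actual_counts)
    psi = 0.0
    for e, a in zip(expected_counts, actual_counts):
        # Floor each share at eps so an empty bin does not cause log(0) or division by zero
        e_share = max(e / expected_total, eps)
        a_share = max(a / actual_total, eps)
        psi += (a_share - e_share) * math.log(a_share / e_share)
    return psi


baseline = [50, 50]  # invented counts per bin at training time
current = [90, 10]   # invented counts per bin in production
psi = population_stability_index(baseline, current)
ALERT_THRESHOLD = 0.2  # common rule of thumb; adjust to your own risk tolerance
print(f"PSI = {psi:.3f}, drift alert = {psi > ALERT_THRESHOLD}")
# PSI = 0.879, drift alert = True
```

A drift alert does not mean the model is wrong, only that its inputs no longer look like its training data, which is exactly the moment to review outcomes and consider retraining.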

Trustworthy AI isn’t just about creating smarter technology; it’s about developing systems that people, customers, and regulators can rely on and believe in. The leading companies in AI aren’t just the ones with advanced tools, but those championing responsible, transparent, and human-centered approaches. To unlock the true promise of AI, organizations must prioritize building trust at every step through thoughtful design, robust policies, and a culture of responsibility.

Before moving ahead with ambitious AI projects, every organization should perform an honest evaluation:

Are your AI systems governed with clear accountability, explainable to stakeholders, and demonstrably fair?

Do your teams have the necessary skills and access to reliable, high-quality data?

Achieving success with AI will depend less on the size of the technology budget and more on the ability to bridge the trust gap. Companies that focus on trust will move faster, experience fewer failures, and reap far greater rewards from their AI investments. This is the foundation for sustainable, scalable, and truly transformative AI.